Streaming video with FastAPI

We will briefly discuss the various options for streaming video over the internet and walk through a simple FastAPI example that plays a video in the browser using the HTML5 video tag.

Video streaming

There are three main options when streaming videos online:

- Streaming video using the HTTP protocol, which is natively supported by the browser's <video> tag.
- Streaming video using WebSockets. I have written before about real-time data streaming using FastAPI and WebSockets. The problem is that the <video> tag doesn't natively support WebSockets at the moment, so displaying the video and playing the sound have to be implemented from scratch. There are some examples online that use the <canvas> element to render the video, but making it all work together with the audio is probably not worth the effort for most people.
- Streaming video using WebRTC. The advantage of WebRTC is that it is a peer-to-peer protocol, so the video/audio stream can be transmitted directly from one client to another without going through a server. The connection, however, needs to be established by other means, e.g. using WebSockets.

Today I will show you a simple video streaming example using the HTTP protocol, since we don't have to implement anything on the frontend ourselves. The server-side code is pretty simple too. It is also not really tied to FastAPI, so it can be easily ported to Django or other frameworks.

The code for this article can be found in the stribny/fastapi-video repo.

Streaming video over HTTP

Let's start with the frontend part, which is going to be just a <video> tag:

```html
<!DOCTYPE html>
<html>
  <head>
    <title>FastAPI video streaming</title>
  </head>
  <body>
    <video width="1200" controls muted="muted">
      <source src="http://localhost:8000/video" type="video/mp4" />
    </video>
  </body>
</html>
```

The only necessary thing is to specify the path to the video file or stream and describe the video format. In this case, the video will be loaded from the /video URL as video/mp4. Multiple <source> tags can also be used to offer multiple streams with different file formats and video encodings.

Optionally, we can specify various attributes to show or hide the video controls, set the element's width, or decide whether playback should start muted.

As far as the frontend is concerned, we are done.

Streaming video with FastAPI

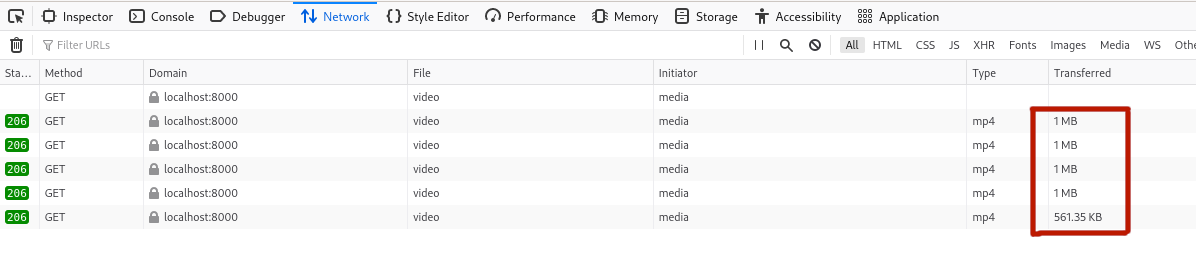

There is a simple mechanism that lets browsers ask for a specific part of the video stream. When requesting data for the video tag, browsers send an HTTP header called Range that specifies the requested range of bytes in the format bytes=1024000-2048000. Both positions are inclusive, and the end can be omitted, as in bytes=0-. We can use this information to send only that specific part of the video file. If the browser needs another part of the stream, it will ask for it automatically until the whole stream is consumed.
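To make the header format concrete, here is a minimal, framework-independent sketch of turning a Range value into an inclusive (start, end) pair. The helper name parse_range and its behavior are illustrative assumptions: it handles only a single range with a known start (bytes=N- or bytes=N-M), rejects multi-part and suffix ranges such as bytes=-500, and clamps the end to the last byte of the file.

```python
CHUNK_SIZE = 1024 * 1024  # 1 MB default chunk, as in the article


def parse_range(header: str, filesize: int, chunk_size: int = CHUNK_SIZE) -> tuple[int, int]:
    """Hypothetical helper: turn 'bytes=0-' or 'bytes=100-199' into an inclusive (start, end)."""
    if not header.startswith("bytes="):
        raise ValueError(f"unsupported range unit: {header!r}")
    spec = header[len("bytes="):]
    if "," in spec:
        # Multiple ranges ("bytes=0-99,200-299") are out of scope for this sketch
        raise ValueError("multiple ranges are not supported")
    start_str, end_str = spec.split("-", 1)
    if not start_str:
        # Suffix ranges ("bytes=-500", i.e. the last 500 bytes) are also out of scope
        raise ValueError("suffix ranges are not supported")
    start = int(start_str)
    if start >= filesize:
        raise ValueError("range starts beyond the end of the file")
    # An open-ended range lets the server pick how much to send
    end = int(end_str) if end_str else start + chunk_size - 1
    # The end position is inclusive and must not point past the last byte
    return start, min(end, filesize - 1)


print(parse_range("bytes=0-", filesize=5_000_000))  # (0, 1048575)
print(parse_range("bytes=1024000-2048000", filesize=5_000_000))  # (1024000, 2048000)
print(parse_range("bytes=4500000-", filesize=5_000_000))  # (4500000, 4999999)
```

The open-ended first request is what gives the server the freedom to choose the chunk size; the clamp matters for the final chunk, which is usually shorter than 1 MB.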

The response needs a couple of headers as well for this to work: Content-Length with the size of the data sent in this response, Content-Range with the range of bytes being sent and the overall size of the file or stream, Content-Type with the content type, and finally Accept-Ranges, which tells the browser that byte ranges are supported.
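As a sketch of the header arithmetic, the values for a response carrying bytes start through end of a file could be computed as below. The helper name partial_content_headers and the fixed video/mp4 type are assumptions for illustration. Because both positions in a byte range are inclusive, the body length is end - start + 1.

```python
def partial_content_headers(start: int, end: int, filesize: int) -> dict[str, str]:
    """Hypothetical helper: headers for a 206 response with bytes start..end (inclusive)."""
    return {
        # Sent range first, total size of the file after the slash
        "Content-Range": f"bytes {start}-{end}/{filesize}",
        # Inclusive range, hence the + 1
        "Content-Length": str(end - start + 1),
        "Content-Type": "video/mp4",
        "Accept-Ranges": "bytes",
    }


for name, value in partial_content_headers(0, 1048575, 5_000_000).items():
    print(f"{name}: {value}")
```

For the first 1 MB of a 5,000,000-byte file this prints Content-Range: bytes 0-1048575/5000000 and Content-Length: 1048576. In the FastAPI version, only Content-Range and Accept-Ranges have to be set by hand.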

The implementation code is fairly simple once we know what to do:

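```python
from pathlib import Path
from typing import Optional

from fastapi import FastAPI, Header, Request, Response
from fastapi.templating import Jinja2Templates

app = FastAPI()
templates = Jinja2Templates(directory="templates")
CHUNK_SIZE = 1024 * 1024  # send at most 1 MB per response by default
video_path = Path("video.mp4")


@app.get("/")
async def read_root(request: Request):
    # Serve the HTML page with the <video> tag from templates/index.htm
    return templates.TemplateResponse("index.htm", context={"request": request})


@app.get("/video")
async def video_endpoint(range: Optional[str] = Header(None)):
    filesize = video_path.stat().st_size
    # Parse "bytes=<start>-<end>"; treat a missing header as "from the beginning"
    start_str, end_str = (range or "bytes=0-").replace("bytes=", "").split("-")
    start = int(start_str)
    if start >= filesize:
        # Nothing to send from this position: 416 Range Not Satisfiable
        return Response(status_code=416, headers={"Content-Range": f"bytes */{filesize}"})
    # HTTP ranges are inclusive, so a 1 MB chunk ends at start + CHUNK_SIZE - 1
    end = int(end_str) if end_str else start + CHUNK_SIZE - 1
    end = min(end, filesize - 1)  # never point past the last byte of the file
    with open(video_path, "rb") as video:
        video.seek(start)
        data = video.read(end - start + 1)
    headers = {
        "Content-Range": f"bytes {start}-{end}/{filesize}",
        "Accept-Ranges": "bytes",
    }
    return Response(data, status_code=206, headers=headers, media_type="video/mp4")
```

I defined two routes. The main route / serves the HTML template with the video tag, while the /video route streams the video.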

The first step when handling the stream request is to parse the Range header to get the start and end bytes so that we know which part of the data to send. A part of the file or stream is also called a chunk. I am using a chunk size of 1 MB as the default: since the browser sends the first request with the open-ended range bytes=0-, it is up to us how much data we send. In the following requests, the browser asks for the remaining parts of the file, using the total size we reported in Content-Range.
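The following sketch models one simple way a client could continue after a partial response: read the last delivered byte and the total size from Content-Range, then ask for everything after it. The helper next_range_request is hypothetical, and real browsers choose their own ranges (for example when the user seeks), so treat this as an illustration of the arithmetic rather than a description of browser internals.

```python
import re
from typing import Optional

# Matches the "bytes <first>-<last>/<total>" form sent by the endpoint
CONTENT_RANGE = re.compile(r"bytes (\d+)-(\d+)/(\d+)")


def next_range_request(content_range: str) -> Optional[str]:
    """Build the next Range header from a Content-Range value, or return None when done."""
    match = CONTENT_RANGE.fullmatch(content_range)
    if match is None:
        raise ValueError(f"unexpected Content-Range: {content_range!r}")
    last, total = int(match.group(2)), int(match.group(3))
    if last + 1 >= total:
        return None  # the final byte has already been delivered
    # Open-ended again, so the server keeps deciding how big the next chunk is
    return f"bytes={last + 1}-"


print(next_range_request("bytes 0-1048575/5000000"))  # bytes=1048576-
print(next_range_request("bytes 4194304-4999999/5000000"))  # None
```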

Once we know what to send, we open the video file with Python's standard open() function (rb indicates that we want to read binary data), move the file position to the starting byte with video.seek(start), and read only the bytes we need with video.read().
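The seek-and-read step can be tried in isolation. This sketch uses a hypothetical read_chunk helper and a small temporary file standing in for video.mp4; like HTTP byte ranges, its end position is inclusive.

```python
import os
import tempfile


def read_chunk(path: str, start: int, end: int) -> bytes:
    """Hypothetical helper: return bytes start..end (inclusive) of the file at path."""
    with open(path, "rb") as f:  # "rb": read binary data
        f.seek(start)  # move the file position to the first requested byte
        return f.read(end - start + 1)  # may return fewer bytes near the end of the file


# A 256-byte file whose byte values equal their positions
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(bytes(range(256)))
try:
    chunk = read_chunk(tmp.name, 10, 19)
    print(list(chunk))  # [10, 11, 12, 13, 14, 15, 16, 17, 18, 19]
finally:
    os.remove(tmp.name)
```

Only the requested bytes are read into memory, which is what keeps each request cheap even for a large video file.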

Finally, we just need to construct a standard Response object with all the required headers. The status code 206 (Partial Content) is like 200, but signals that only part of the resource is being sent. The data itself is passed simply as bytes. The two remaining headers, Content-Length and Content-Type, are filled in automatically by FastAPI: Content-Length from the size of the body and Content-Type from the media_type argument.

If we run the server and open the page in the browser, we will see the video being loaded in 1 MB chunks.
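To see why the video arrives in 1 MB chunks without running a server, this sketch replaces the endpoint with an in-memory function (serve, a hypothetical stand-in for /video) and plays the role of a client that keeps requesting the next open-ended range until the whole file has been received. The 2.5 MB all-zero "video" is made-up test data.

```python
CHUNK_SIZE = 1024 * 1024  # same 1 MB default as the endpoint


def serve(video: bytes, range_header: str) -> tuple[bytes, str]:
    """Hypothetical in-memory stand-in for /video: returns (body, Content-Range)."""
    start_str, end_str = range_header.replace("bytes=", "").split("-")
    start = int(start_str)
    end = int(end_str) if end_str else start + CHUNK_SIZE - 1
    end = min(end, len(video) - 1)  # inclusive end, clamped to the last byte
    return video[start:end + 1], f"bytes {start}-{end}/{len(video)}"


def download(video: bytes) -> tuple[bytes, list[str]]:
    """Keep requesting the next open-ended range until every byte has arrived."""
    received = bytearray()
    log = []
    range_header = "bytes=0-"  # first request: let the server choose the chunk size
    while True:
        body, content_range = serve(video, range_header)
        received += body
        log.append(content_range)
        span, total = content_range[len("bytes "):].split("/")
        last = int(span.split("-")[1])
        if last + 1 >= int(total):
            return bytes(received), log
        range_header = f"bytes={last + 1}-"


video = bytes(2_621_440)  # 2.5 MB of zero bytes standing in for a real file
data, log = download(video)
for line in log:
    print(line)
# bytes 0-1048575/2621440
# bytes 1048576-2097151/2621440
# bytes 2097152-2621439/2621440
```

Two full 1 MB chunks are followed by a shorter final one, and the reassembled bytes match the original file.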

Last updated on 16.3.2021.